- AI Exec

Meta is spending $110 billion on AI

It’s now clear this spending is committed, not speculative

👋 Good morning/evening (wherever you are). It’s Friday.

Today, Meta confirmed it is investing $110 billion this year in AI infrastructure and R&D, a massive bet in the global AI race.

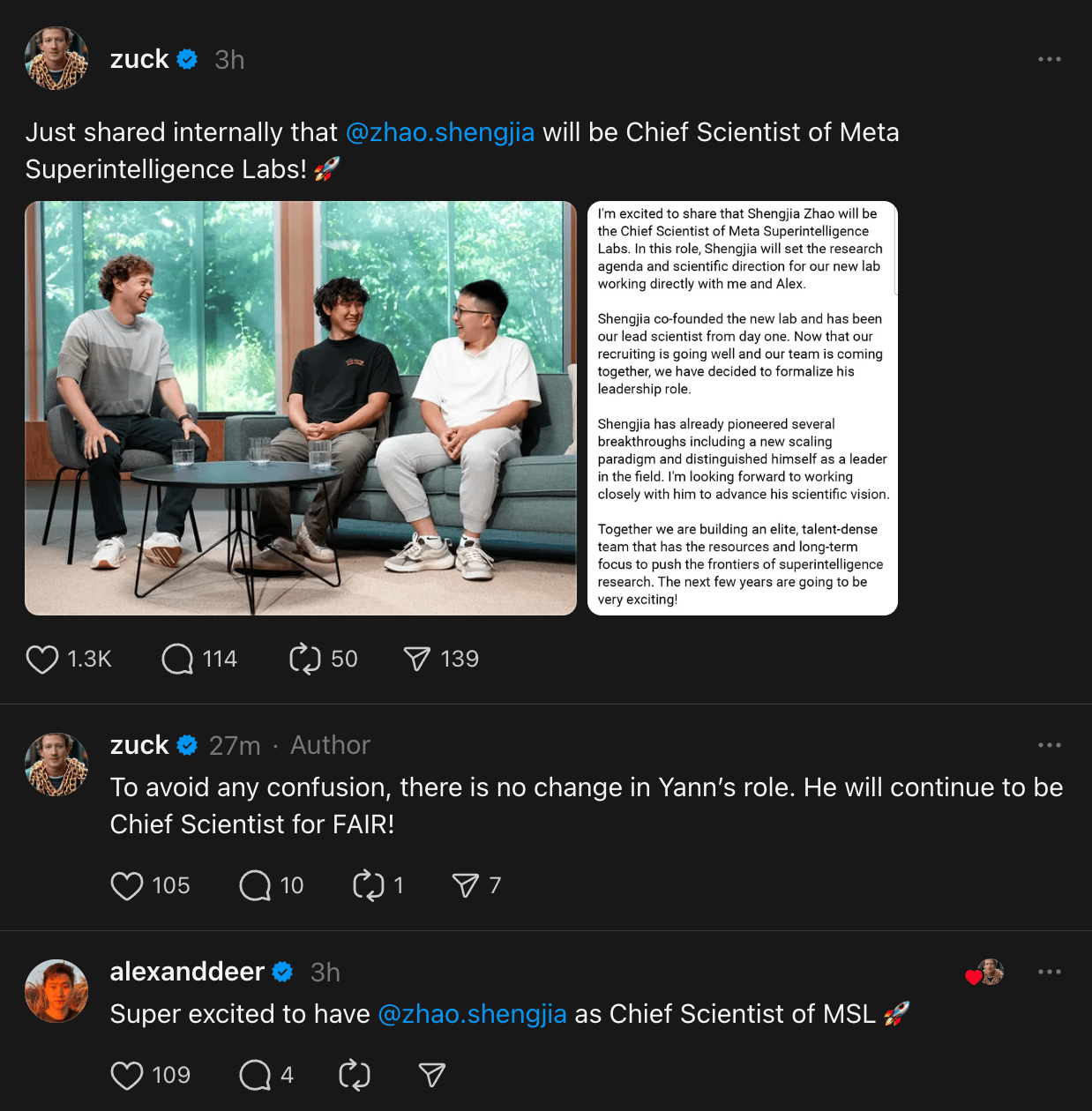

Alongside this huge investment, Meta made an important leadership announcement.

Shengjia Zhao is now officially Chief Scientist of Meta Superintelligence Labs (MSL). He co-founded the lab and has already led major breakthroughs, including a new scaling paradigm. His role is now formalized as MSL intensifies its focus on superintelligence research with a small, specialized team.

Zuck also made it clear that Yann LeCun remains Chief Scientist at FAIR, Meta’s original AI research division.

Source: @zuck on Threads

OK let’s keep going ↓

🔥 Creative pulse

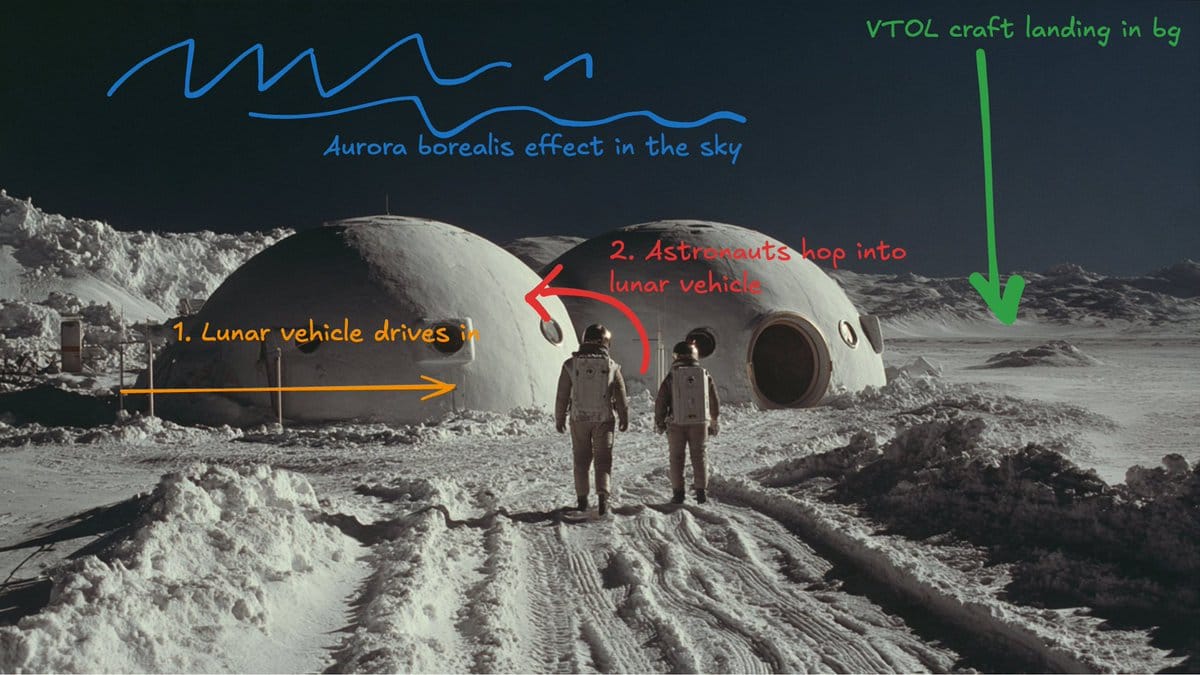

A new trend is emerging around Veo 3’s visual annotation feature.

People are starting to sketch their ideas directly on an image, using the "frames to video" feature in Google Flow to bring them to life.

In the example below, the image started in Midjourney and then Veo 3 (within Google Flow) added motion.

My quick thoughts on this: Creatives who are used to sketching on iPads will have a lot of fun with this.

In the long run, voice will be the next frontier: you'll direct scenes simply by speaking, literally speaking things into existence.

More on that soon.

Source: @bilawalsidhu on X

Google just discovered a powerful emergent capability in Veo 3 - visually annotate your instructions on the start frame, and Veo just does it for you!

Instead of iterating endlessly on the perfect prompt, defining complex spatial relationships in words, you can just draw it out

— Bilawal Sidhu (@bilawalsidhu)

8:33 PM • Jul 25, 2025

My top 2 video posts on LinkedIn this week, in case you missed them:

Matt Zien’s latest masterpiece, LAST CALL BEFORE AGI

JSON prompts are all the rage (some hate them, some love them). Watch the Veo 3 Tesla video and copy the exact prompt.

P.S. I'm launching an AI creative community in less than six days. I'll share the link here first, so keep an eye out.

💡 Here’s what you should know

Google is testing a vibe-coding app

Opal lets users generate and remix web apps through simple text prompts and editable visual workflows.

Runway introduces a new in-context video model

Aleph can edit and transform video with prompts, enabling object changes, new camera angles, and full scene generation.

Claude Code adds support for specialized subagents

Developers can now create task-specific AI agents with their own tools, prompts, and context windows for focused workflows.

Goldman Sachs employees now write 1 million AI prompts a month

The bank has rolled out generative AI tools across its 46,000-person workforce, with agents like Devin acting as team members.

Microsoft tests Copilot Appearance

A new experimental feature lets select users in the US, UK, and Canada talk to Copilot by live voice, with Copilot responding through facial expressions and drawing on conversational memory.

Pony.ai launches 24/7 robotaxis in China

The company’s autonomous fleet now operates all day in Guangzhou and Shenzhen, with advanced sensors enabling safe navigation even at night.

💰 The numbers

Anthropic is in talks with Middle East backers, targeting a $150B+ valuation.

ServiceNow signs $1.2B cloud deal with Google, marking another major win for GCP after OpenAI and Salesforce.

5C secured $835M to expand its modular AI data centers across North America, in a round led by Brookfield and Deutsche Bank.

🧠 Thought starters

Unitree just launched the R1, a lightweight humanoid robot starting at $5,900.

It weighs only 25 kg (about 55 lbs) and is built for everyday interaction using voice and vision AI.

😂 Meme of the day

Thank you for your interest in Astronomer.

— Astronomer (@astronomerio)

11:39 PM • Jul 25, 2025

Thanks for reading,

Eddie

P.S. If this was valuable, forward it to a friend. If you’re that smart friend, subscribe here.

P.P.S. Interested in reaching other ambitious readers like you? To become a sponsor, reply to this email.